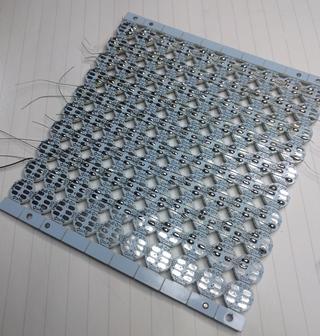

It all started more than a week ago when I was given a 10x10 panel of WS2812 LEDs designed to be broken apart into individual boards. I might have said at that moment: "It is a panel, it's just missing a few wires", and so this story begins...

It took me the whole day to add those few wires and turn it into a panel.

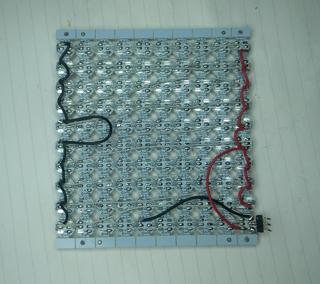

I started testing it using an Arduino Nano with the wrong FastLED example (which supports just 4 WS2812 LEDs) and wondered why I wasn't getting the whole panel to light up. After some sleep, I tried the Adafruit example, fixed one broken data out-to-in wire in the middle of the panel, and I got this:

So, playing video on this panel should be easy now, right?

First, I had to choose a platform to drive the panel. While my 10x10 panel with 100 LEDs needs just 300 bytes for a single frame (3 bytes of RGB per LED), I didn't want to have a video sending device wired to it. So, the ESP8266 was the logical choice to provide network connectivity to the panel without a USB connection (which we still need, but just for power).

At first, I took the Lolin NodeMCU clone, which doesn't have 5V broken out (why?). Its VIN pin has a diode between the USB 5V pin and VIN, and the diode voltage drop is enough to keep the WS2812 dark all the time.

Switching to the Wemos D1 mini did help there, but what to run on it? I found some examples that were too clever for me (for an 8x8 panel, they use JPEG and just decode a single 8x8 block to show it, which won't work for my 10x10 panel).

After a bit of googling, it seems to me that the WLED project (https://github.com/Aircoookie/WLED) is somewhat of a Tasmota for WS2812 on ESP8266, so I decided to use it. While it's designed for simple strips rather than a WS2812 matrix, it has UDP realtime control, which lets me send it each frame as a 302-byte UDP packet (a two-byte header followed by 300 bytes of RGB data).
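To make the packet layout concrete, here is a minimal sketch that assembles one such 302-byte payload from a raw frame file. The header values are my assumption and are not taken from the scripts in this post: as far as I understand the WLED realtime protocol, the first byte selects the mode (2 for plain RGB triples) and the second is a timeout in seconds. The `make_packet` helper and the file names are made up for illustration.

```shell
#!/bin/sh
# Sketch: build one realtime UDP payload for a 10x10 panel.
# Assumptions (not from the post): header byte 1 = 2 selects WLED's
# DRGB mode, header byte 2 = timeout in seconds (2 is arbitrary).
WIDTH=10
HEIGHT=10
FRAME_BYTES=$((WIDTH * HEIGHT * 3))    # one R, G, B byte per LED

make_packet() {
    # $1 = raw RGB frame file, $2 = packet file to create
    printf '\002\002' > "$2"               # two-byte header
    head -c "$FRAME_BYTES" "$1" >> "$2"    # 300 bytes of pixel data
}

# Demo with an all-black frame in a scratch directory
tmp=$(mktemp -d)
head -c "$FRAME_BYTES" /dev/zero > "$tmp/black.rgb"
make_packet "$tmp/black.rgb" "$tmp/black.packet"
echo "packet size: $(wc -c < "$tmp/black.packet") bytes"
```

Keeping the packet in a file rather than a shell variable matters here, because shell variables cannot hold the zero bytes that dark pixels produce.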

So I started writing scripts, which are at https://github.com/dpavlin/WLED-video, to first convert video to raw frames using something as simple as ff2rgb.sh:

dpavlin@nuc:/nuc/esp8266/WLED-video$ cat ff2rgb.sh

#!/bin/sh -xe

f=$1

test ! -d $f.rgb && mkdir $f.rgb || rm -v $f.rgb/*.png

ffmpeg -i $f -vf scale=10x10 $f.rgb/%03d.png

ls $f.rgb/*.png | xargs -i convert {} -rotate 180 -gamma 0.3 -depth 8 {}.rgb

To send frames I wrote a simple send.pl script. I would have loved to use bash UDP support or some standard utility (like netcat or socat) to send frames, but null bytes in the data didn't work well with shell pipes and I wasn't able to make it work.
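For anyone who wants to retry the bash route, the following is a rough sketch of how it might be done, not something I have verified against a real panel. It assumes bash (for its `/dev/udp` redirection), WLED listening on UDP port 21324, and the same assumed two-byte header of mode 2 and timeout 2. The idea is to build each packet in a temporary file and write it out in one go, since every separate write to a UDP socket becomes its own datagram. The `send_frames` name, directory, and address are hypothetical.

```shell
#!/bin/bash
# Sketch: send every *.rgb frame in a directory to WLED over UDP.
# Needs bash at run time for /dev/udp. Port and header are assumptions.

send_frames() {
    dir=$1 host=$2 port=${3:-21324}
    [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
    pkt=$(mktemp)
    for frame in "$dir"/*.rgb; do
        # Header and pixels go into a file first, so they leave in a
        # single write and therefore as a single UDP datagram.
        { printf '\002\002'; cat "$frame"; } > "$pkt"
        cat "$pkt" > "/dev/udp/$host/$port"
        sleep 0.04    # about 25 frames per second (fractional sleep is a GNU extension)
    done
    rm -f "$pkt"
}

# Usage (hypothetical directory and address):
# send_frames rick.gif.rgb 192.168.1.50
```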

I also figured out that I have to modify gamma values for my frames so that colors are somewhat more correct (I had a flame video which had blue hues on it without gamma correction). This is somewhat strange because WLED does have gamma correction for colors turned on, but it doesn't help, and turning it off doesn't help either. So, gamma correction in pre-processing it is...
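To get a feel for what `-gamma 0.3` does to a single channel, here is a small sketch of the usual power-law curve. It assumes ImageMagick maps a value v to 255 * (v/255)^(1/gamma), which matches its documented behaviour that gamma below 1.0 darkens the image, but I have not checked it against the exact implementation. The `gamma_map` helper is made up for illustration.

```shell
#!/bin/sh
# Sketch: power-law gamma curve for one 8-bit channel.
# Assumption: "-gamma G" maps v to 255 * (v/255)^(1/G).
gamma_map() {
    # $1 = input value 0..255, $2 = gamma
    awk -v v="$1" -v g="$2" \
        'BEGIN { printf "%d\n", 255 * (v / 255) ^ (1 / g) + 0.5 }'
}

# Print the mapping for a few sample values
for v in 0 64 128 192 255; do
    echo "$v -> $(gamma_map "$v" 0.3)"
done
```

Under that assumption, black and full brightness stay where they are while everything in between is pushed down hard, so weak channel values largely disappear. That would be consistent with the faint blue tint going away, though this is only my guess at the mechanism.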

And since I already had a Perl script to send UDP packets, I decided to open ffmpeg from it and make a single script, ff2wled.pl, which sends video to the panel like this:

dpavlin@nuc:/nuc/esp8266/WLED-video$ ./ff2wled.pl rick.gif
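The same idea of reading frames straight from ffmpeg can be sketched in shell as well. This is not the ff2wled.pl code, just an illustration: ffmpeg can write raw `rgb24` pixels to standard output, and at 10x10 every frame is exactly 300 bytes, so the stream can be cut into frames by size alone. The `video_to_frames` helper and the output prefix are hypothetical, and this sketch skips the rotation and gamma steps from ff2rgb.sh.

```shell
#!/bin/sh
# Sketch: decode straight to raw RGB and cut the stream into frames.
FRAME_BYTES=$((10 * 10 * 3))    # 300 bytes per 10x10 frame

video_to_frames() {
    # $1 = input video, $2 = output prefix (hypothetical naming)
    ffmpeg -loglevel error -i "$1" -vf scale=10:10 \
        -f rawvideo -pix_fmt rgb24 - | split -a 4 -b "$FRAME_BYTES" - "$2"
}

# Demo of the splitting step alone, on three fake all-black frames
out=$(mktemp -d)
head -c $((FRAME_BYTES * 3)) /dev/zero | split -a 4 -b "$FRAME_BYTES" - "$out/frame."
ls "$out"
```

The `-a 4` suffix length leaves room for a few hundred thousand frames, where the default two-letter suffix would run out after 676.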

Was it all worth it? Honestly, no. The panel is so small that video playback is really too much to ask of such a low resolution, and it would be so much easier to buy a ready-made panel with more LEDs. But, I did learn a few tricks with ffmpeg, and hopefully somebody else will benefit from this post.