I have always loved graphs. For my monitoring needs I use munin, and over the last few days I have been migrating one installation from a machine behind a DSL line to a co-located (virtual) server. With the old munin server I would occasionally get e-mail alerts about unavailable services. This was mostly because n2n, which we use to connect to the nodes, forgot about a node or dropped packets on the busy DSL line. In the process, I decided to jump ahead and install version 2.0-rc5 from Debian unstable. This blog post will try to describe my journey...

I started simply by installing a new munin instance on a new (virtual) machine. Historic data is important to me, so I decided to move the configuration and graphs over to the new instance. Simply copying the rrd files over didn't go all that well. It resulted in the dreaded "This RRD was created on another architecture" error, because the binary rrd format depends on the machine architecture. This was unfortunate, but the rrd files were so large that the transfer wouldn't fit into the 5-minute munin poll interval anyway, so I had to take a different approach.

To keep all historical data and not lose any munin polls during the transfer, I first configured the new munin server to poll all clients, so that new updates would be preserved. While this was running, I copied the rrd files over from the old server. This involved editing all nodes (9 of them!), and Cluster SSH was the perfect tool for adding an additional allow ^192\.168\.0\.10$ line to /etc/munin/munin-node.conf on all nodes at once.
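Cluster SSH simply mirrors keystrokes to every terminal, so the same edit can also be scripted. Here is a minimal sketch, assuming a POSIX shell on the nodes. The helper name add_munin_allow is made up for illustration. It escapes the dots of an IP address, anchors the result as the regular expression that munin-node's allow directive expects, and appends the line only if it isn't already there, so running it twice is harmless. munin-node still needs a restart afterwards to read the new configuration.

```shell
#!/bin/sh
# Hypothetical helper: add an "allow" line for a munin server IP to a
# munin-node.conf, unless an identical line is already present.
add_munin_allow() {
	conf=$1   # e.g. /etc/munin/munin-node.conf
	ip=$2     # e.g. 192.168.0.10

	# "allow" takes a regular expression, so escape the dots and anchor it:
	# 192.168.0.10 -> ^192\.168\.0\.10$
	re="^$(printf '%s' "$ip" | sed 's/\./\\./g')\$"
	line="allow $re"

	# -x: match the whole line, -F: fixed string (the line contains regex chars)
	if ! grep -qxF "$line" "$conf"; then
		printf '%s\n' "$line" >> "$conf"
	fi
}

# Usage on each node (e.g. typed once into Cluster SSH):
#   add_munin_allow /etc/munin/munin-node.conf 192.168.0.10
#   /etc/init.d/munin-node restart
```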

Copying the rrd files had to be done with rrdtool dump/restore, and it had to go over a compressed ssh link because of the slow DSL line. For that, a small shell script came in very handy:

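#!/bin/sh -x
# Copy munin rrd files to the new server using rrdtool dump/restore,
# which avoids the "created on another architecture" error.

dir=/var/lib/munin/maxxo

# All transfers share one compressed ssh connection (ControlMaster).
via_ssh="ssh -o ControlPath=/tmp/ssh_sock -o ControlMaster=auto -o Compression=yes root@10.1.3.10"

# First run: no master socket yet, so open one and keep it alive
# (the remote "read" blocks until you press Enter).
if [ ! -e /tmp/ssh_sock ] ; then
	$via_ssh read
fi

# Second run: dump each rrd to XML locally and restore it remotely.
for file in "$dir"/*.rrd
do
	echo "$file"
	rrdtool dump "$file" | $via_ssh rrdtool restore - "$file" --force-overwrite
done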

You need to start it twice. The first invocation asks for the password and opens the master ssh socket, then keeps it open while the remote read waits for input. The second invocation, started from another terminal, uses that socket to transfer the rrd files over the compressed ssh link without per-file connection overhead. We are talking about 4560 rrd files totalling over 250 MB, after all... Even with all this, the transfer took an hour and a half, so having the new server already polling all nodes during the copy was really required.
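If the two-terminal routine gets tiresome, OpenSSH can put the master connection into the background by itself. The following is a sketch of a single-run variant, not the script I actually used, and the function name copy_rrds is hypothetical. ssh -f -N authenticates (asking for the password if needed), then backgrounds itself without running a remote command. ssh -O exit tells the master to shut down once all files are copied. The socket path includes the shell's PID ($$) so that two runs don't collide.

```shell
#!/bin/sh
# Hypothetical single-run variant of the copy script: opens a background
# ControlMaster connection, copies all rrds through it, then closes it.
copy_rrds() {
	dir=$1      # local munin data dir, e.g. /var/lib/munin/maxxo
	target=$2   # remote login, e.g. root@10.1.3.10
	sock=/tmp/ssh_sock_$$
	opts="-o ControlPath=$sock"

	# -f: go to background after authentication, -N: no remote command.
	# Compression is negotiated by the master connection, so the sessions
	# multiplexed over it are compressed too.
	ssh -f -N -o ControlMaster=yes -o Compression=yes $opts "$target" || return 1

	for file in "$dir"/*.rrd; do
		[ -e "$file" ] || continue   # the glob matched nothing
		echo "$file"
		rrdtool dump "$file" |
			ssh $opts "$target" rrdtool restore - "$file" --force-overwrite
	done

	# Ask the background master connection to exit.
	ssh $opts -O exit "$target"
}

# Usage: copy_rrds /var/lib/munin/maxxo root@10.1.3.10
```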

You might think that's all, but unfortunately it's not. Looking in /var/log/munin/munin-update.log, I could see "[FATAL] Socket read timed out to node. Terminating process.". Some nodes needed more time than munin's default of 30 seconds to respond with all their data. The ipmi plugins, for example, seem to be notoriously slow to respond. To change the server-side timeout, you have to pass --timeout 90 to the munin-update utility. Unfortunately, on Debian you can't do that by modifying the munin-cron invocation in /etc/cron.d/munin. munin-cron passes all its parameters on to munin-limits, which doesn't have a timeout option and dies on you (moral of the story: check cron e-mail while configuring cron jobs). In the end, I edited /usr/bin/munin-cron directly, changing one line:

/usr/share/munin/munin-update --timeout 90 $@ || exit 1

This will probably break with the next package update, but this blog post will remind me to change it again :-)
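Since an upgrade will probably overwrite that edit, it can help to keep it as a re-runnable command. This is a sketch that assumes the stock line reads /usr/share/munin/munin-update $@ || exit 1, the line above without --timeout 90. The function name patch_munin_cron is made up, and sed -i is the GNU sed option available on Debian. The expression only matches the unpatched form, so running it again once the change is in place does nothing.

```shell
#!/bin/sh
# Hypothetical helper: (re-)apply the --timeout 90 edit to munin-cron.
# Assumes the unmodified line is: /usr/share/munin/munin-update $@ || exit 1
patch_munin_cron() {
	file=${1:-/usr/bin/munin-cron}
	# "\$@" matches a literal "$@". After patching, the line no longer contains
	# "munin-update $@", so a second run leaves the file unchanged.
	sed -i 's|^\([[:space:]]*/usr/share/munin/munin-update\) \$@|\1 --timeout 90 $@|' "$file"
}

# Usage (as root, after each munin package upgrade):
#   patch_munin_cron /usr/bin/munin-cron
```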

There were also a few tweaks to munin-node plugins to make them work inside kvm. The iostat_ios plugin from munin-plugins-extra ignored virtio devices, because they have major number 254, the same as LVM2 devices, which the plugin filters out. The following patch solved this problem:

diff --git a/cs-munin/etc/munin/plugins/iostat_ios b/cs-munin/etc/munin/plugins/iostat_ios
index 1380eb1..823df63 100755
--- a/cs-munin/etc/munin/plugins/iostat_ios
+++ b/cs-munin/etc/munin/plugins/iostat_ios
@@ -101,7 +101,7 @@ sub filter {
return 0 if ($major == 1); # RAM devices
return 0 if ($major == 9); # MD devices
return 0 if ($major == 58); # LVM devices
- return 0 if ($major == 254); # LVM2 devices
+ return 1 if ($major == 254); # LVM2 devices and KVM virtio
}
if(defined($tmpnam)) {
return 0 if ($tmpnam =~ /part\d+$/);
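To check which major number the virtio disks actually got on a given guest, and whether it collides with the LVM2 one, you can look at /proc/diskstats. Its first three columns are the major number, the minor number and the device name. This is a small sketch; the function name list_major is hypothetical and the file argument defaults to /proc/diskstats.

```shell
#!/bin/sh
# Hypothetical helper: print the names of block devices with a given major
# number, read from /proc/diskstats (columns: major minor name ...).
list_major() {
	major=$1
	stats=${2:-/proc/diskstats}
	awk -v m="$major" '$1 == m { print $3 }' "$stats"
}

# Usage: list_major 254   # lists e.g. vda, vda1, ... if virtio got major 254
```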

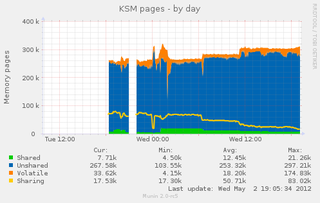

I also decided to use ksm (kernel samepage merging), which I enabled with the following line in /etc/rc.local:

echo 1 > /sys/kernel/mm/ksm/run

And of course, now I had to graph it, using a simple shell ksm munin plugin. The dropping sharing line on this graph makes me think that ksm wasn't really needed, but we'll see in a few more days.
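My plugin isn't reproduced here, but a munin plugin for ksm can be quite short. The sketch below follows the munin plugin protocol: when called with config it prints the graph definition, and otherwise it prints one field.value line per field. The values come from counters the kernel exposes in /sys/kernel/mm/ksm/. The KSM_DIR override is an assumption of this sketch, there only so the script can be tried against a copy of those files; it is not a munin setting.

```shell
#!/bin/sh
# Hypothetical munin plugin sketch graphing ksm page counters.
FIELDS="pages_shared pages_sharing pages_unshared pages_volatile"

ksm_plugin() {
	# KSM_DIR is a testing override; it defaults to the kernel's sysfs dir.
	dir=${KSM_DIR:-/sys/kernel/mm/ksm}
	if [ "$1" = "config" ]; then
		echo "graph_title KSM"
		echo "graph_vlabel pages"
		echo "graph_category system"
		for f in $FIELDS; do
			echo "$f.label $f"
		done
		return 0
	fi
	for f in $FIELDS; do
		# Each sysfs file holds a single number.
		echo "$f.value $(cat "$dir/$f")"
	done
}
```

To use it as an actual plugin, the file would end with the line ksm_plugin "$@", be made executable in /etc/munin/plugins/, and munin-node would need a restart to pick it up.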

To track other kvm parameters, I used munin-libvirt-plugins, which comes with its own helper script, munin-libvirt-plugins-detect. You have to run it to enable the plugins and generate their configuration.

For the web server, I opted for apache2 and libapache2-mod-fastcgi together with graph_strategy cgi and html_strategy cgi in /etc/munin/munin.conf. I did this mostly to save some performance on the polling machine, since graphs and HTML are then generated on request instead of after every update run. To make it work, I had to copy /etc/munin/apache.conf to /etc/apache2/conf.d/munin and uncomment the relevant fast-cgi lines inside. After that, dynamically generated HTML is available at http://munin.example.com/munin-cgi/. If you ever ran munin-html before, you will still get the old (obsolete) static HTML pages if you visit them directly.
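The two munin.conf directives can be edited by hand, or with a small idempotent helper like the hypothetical set_munin_option below. It replaces an existing, possibly commented-out, global directive or appends it if missing. It assumes munin.conf's simple "name value" syntax for these settings, written at the start of the line, and GNU grep/sed (for -i and \? in basic regular expressions).

```shell
#!/bin/sh
# Hypothetical helper: set a global "name value" directive in munin.conf,
# replacing an existing (or commented-out) line, else appending one.
# Relies on GNU grep/sed (\? in BRE, sed -i).
set_munin_option() {
	conf=$1; name=$2; value=$3
	# Only match the directive at the start of a line, optionally after "#".
	pattern="^#\?$name[[:space:]]"
	if grep -q "$pattern" "$conf"; then
		sed -i "s|$pattern.*|$name $value|" "$conf"
	else
		printf '%s %s\n' "$name" "$value" >> "$conf"
	fi
}

# Usage:
#   set_munin_option /etc/munin/munin.conf graph_strategy cgi
#   set_munin_option /etc/munin/munin.conf html_strategy cgi
```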

Next step would probably be to get rrdcached up and running...

Update: If you get 404 or 500 errors when graphs are generated via cgi, you will have to add cgitmpdir /tmp/munin/ to /etc/munin/munin.conf.