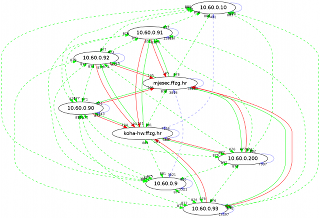

I wrote initial version of bak-git more than a month ago, and started using it to manage my share of Internet cloud. Currently, it's 16 hosts, some of them real hardware, some OpenVZ or LXC containers.

Since then, I implemented two new features in bak-git:

- lighttpd configuration for gitweb with ssl and auth (so you can easily review your changes)

- ability to do diff and revert on remote hosts

On central node, you create proxy configuration under new fake host _lib

dpavlin@klin:~/klin/backup$ cat _lib/etc/apt/apt.conf.d/02proxy

Acquire::http { Proxy "http://10.60.0.91:3142"; };

Then you can login into any other host and check:

dpavlin@mjesec:~$ bak diff _lib:/etc/apt/apt.conf.d/02proxyno output, so same as in central _lib configuration, but

dpavlin@opl:~$ bak diff _lib:/etc/apt/apt.conf.d/02proxy

--- opl/etc/apt/apt.conf.d/02proxy 1970-01-01 01:00:00.000000000 +0100

+++ _lib/etc/apt/apt.conf.d/02proxy 2010-03-18 23:22:55.000000000 +0100

@@ -0,0 +1 @@

+Acquire::http { Proxy "http://10.60.0.91:3142"; };

This seem like missing configuration. Let's install it (revert from shared configuration template _lib):

dpavlin@opl:~$ bak revert _lib:/etc/apt/apt.conf.d/02proxyHave in mind that this didn't commit this configuration change to bak-git, it just created file on local file system.

bak diff hostname:/path is more powerful than that. It can make diff of file which isn't tracked on remote host with local one, allowing easy comparison of any file on file-system to another file with same path on remote host. As a (useful) side-effect, file will be copied to central server, but not committed. You can choose to commit it later, or remove it from backup directory, but handy copy at diff time is nice to because it records your interest in that file.

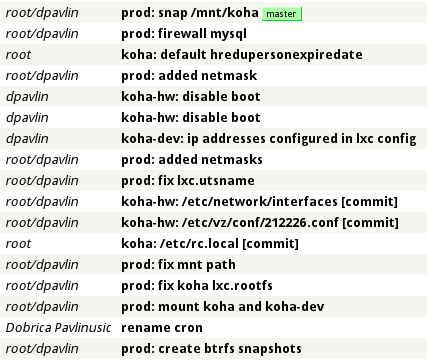

I also misused git's Author: field to track user which committed change:

Author: root/dpavlinThis means that I was using sudo to become root on hostname prod. To create more useful log in gitweb, I also prefixed messages with hostname: to create nicer output in gitweb:

I don't like inserting redundant information in message, but it's very useful to see author, hostname and message at a glance and if you want more details, you can always use bak log or bak changes on hosts or git directly in your backup directory:

dpavlin@klin:~/klin/backup$ git log --stat

commit 3ba2f2ebde044232983e0ea9ffdeb2afc0012cf9

Author: root/dpavlin <prod>

Date: Sat Mar 27 01:12:15 2010 +0100

prod: snap /mnt/koha

prod/etc/cron.d/btrfs-snap | 1 +

1 files changed, 1 insertions(+), 0 deletions(-)

commit 40779a5c13ee12462b3b50f7ea1ace2363facd58

Author: root/dpavlin <prod>

Date: Sat Mar 27 00:54:15 2010 +0100

prod: firewall mysql

prod/etc/rc.local | 18 ++++++++++++++++++

1 files changed, 18 insertions(+), 0 deletions(-)

commit 6a433b853c9da2817bc76afa2e35cc1ed360c590

Author: root <koha>

Date: Fri Mar 26 23:29:00 2010 +0100

koha: default hredupersonexpiredate

koha/etc/koha/koha-conf.xml | 2 ++

1 files changed, 2 insertions(+), 0 deletions(-)