If you are put in front of a working radius server which you want to upgrade, but this is your first encounter with radius, the following notes might be useful to get you started.

The goal is to upgrade the system and test whether everything still works after the upgrade.

radtest

The first way to test radius is radtest, which comes with freeradius and lets you verify whether a login/password combination results in successful authentication.

You have to ensure that there is a client entry for 127.0.0.1, in our case in the /etc/freeradius/3.0/clients-local.conf file:

client 127.0.0.1 {

ipv4addr = 127.0.0.1

secret = testing123

shortname = test-localhost

}

Restart freeradius and test:

# systemctl restart freeradius

# radtest username@example.com PASSword 127.0.0.1 0 testing123

Sent Access-Request Id 182 from 0.0.0.0:45618 to 127.0.0.1:1812 length 86

User-Name = "username@example.com"

User-Password = "PASSword"

NAS-IP-Address = 193.198.212.8

NAS-Port = 0

Message-Authenticator = 0x00

Cleartext-Password = "PASSword"

Received Access-Accept Id 182 from 127.0.0.1:1812 to 127.0.0.1:45618 length 115

Connect-Info = "NONE"

Configuration-Token = "djelatnik"

Callback-Number = "username@example.com"

Chargeable-User-Identity = 0x38343431636162353262323566356663643035613036373765343630333837383135653766376434

User-Name = "username@example.com"

# tail /var/log/freeradius/radius.log

Tue Dec 27 19:41:15 2022 : Info: rlm_ldap (ldap-aai): Opening additional connection (11), 1 of 31 pending slots used

Tue Dec 27 19:41:15 2022 : Auth: (9) Login OK: [user@example.com] (from client test-localhost port 0)

This will also test connection to LDAP in this case.
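Since the goal is to compare behaviour before and after the upgrade, the radtest run above can be wrapped so that it gives a one-line verdict. This is only a minimal sketch: the helper names is_access_accept and radtest_check are my own (hypothetical), and it assumes that a successful login always shows a line starting with "Received Access-Accept", as in the output above.

```shell
#!/bin/sh
# Succeeds (exit 0) if the radtest output read from stdin contains a line
# starting with "Received Access-Accept".
is_access_accept() {
    grep -q '^Received Access-Accept'
}

# Hypothetical helper: run radtest against the local server and print a verdict.
# Arguments: user, password, shared secret (NAS port is fixed to 0 as above).
radtest_check() {
    if radtest "$1" "$2" 127.0.0.1 0 "$3" 2>&1 | is_access_accept; then
        echo "OK $1"
    else
        echo "FAIL $1"
        return 1
    fi
}
```

Running radtest_check username@example.com PASSword testing123 before and after the upgrade should print the same verdict both times.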

radsniff -x

To dump radius traffic on a production server to stdout, use radsniff -x.

This is useful, but it won't show you the encrypted parts of EAP.

freeradius logging

To see the full protocol decode from freeradius, you can run it in a terminal with the -X flag, which runs it in the foreground with debug output.

# freeradius -X

If you have the ability to run an isolated freeradius for testing, this is the easiest way to see all of the parsed configuration (and warnings!) and the decoded EAP traffic.
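If you only want the configuration parsed, without actually starting the server, FreeRADIUS 3.x also has a check-only mode. The sketch below assumes that the server binary accepts -C (check configuration and exit) together with -X; the helper name check_radius_config and the RADIUSD variable are hypothetical, and the binary name defaults to freeradius as on Debian.

```shell
#!/bin/sh
# Name of the server binary (assumption: "freeradius" on Debian, often
# "radiusd" on other systems); override via the environment if needed.
RADIUSD=${RADIUSD:-freeradius}

# Parse the configuration in check-only mode (-C) with debug output (-X),
# print any lines mentioning warnings, and return the server's exit status.
check_radius_config() {
    out=$("$RADIUSD" -XC 2>&1)
    status=$?
    printf '%s\n' "$out" | grep -i 'warning'
    return "$status"
}
```

Calling check_radius_config before systemctl restart freeradius gives you a chance to catch a broken configuration while the old process is still serving requests.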

generating more verbose log file

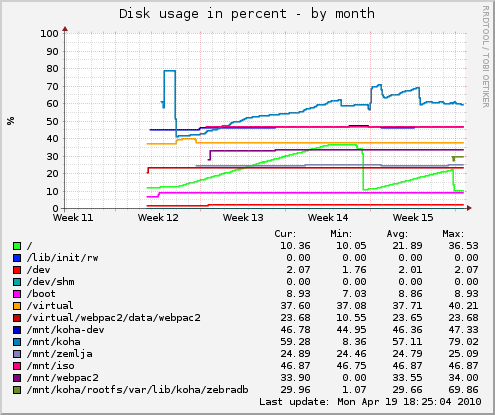

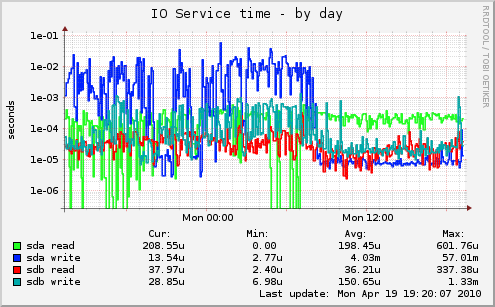

Adding -x to /etc/default/freeradius or to the radius command line will generate a debug log in the log file. Be mindful of disk space usage with the additional logging! However, to get enough debugging in the logs to see which EAP type is unsupported, like:

dpavlin@deenes:~/radius-tools$ grep 'unsupported EAP type' /var/log/freeradius/radius.log

(27) eap-aai: Peer NAK'd asking for unsupported EAP type PEAP (25), skipping...

(41) eap-aai: Peer NAK'd asking for unsupported EAP type PEAP (25), skipping...

(82) eap-aai: Peer NAK'd asking for unsupported EAP type PEAP (25), skipping...

(129) eap-aai: Peer NAK'd asking for unsupported EAP type PEAP (25), skipping...

(142) eap-aai: Peer NAK'd asking for unsupported EAP type PEAP (25), skipping...

you will need to use -xx (x two times). Again, monitor disk usage carefully.
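On a busy server the same message repeats a lot, so it can help to count the requests per EAP type instead of reading every line. A minimal sketch, assuming the log lines have exactly the "unsupported EAP type NAME (NUMBER)" shape shown in the grep output; the function name count_unsupported_eap is hypothetical.

```shell
#!/bin/sh
# Read radius.log lines on stdin and print "<count> <EAP type> (<number>)"
# for every "unsupported EAP type" message, most frequent type first.
count_unsupported_eap() {
    sed -n 's/.*unsupported EAP type \([^ ]*\) (\([0-9]*\)).*/\1 (\2)/p' |
        sort | uniq -c | sort -rn
}
```

It is used as count_unsupported_eap < /var/log/freeradius/radius.log, which tells you which EAP types clients ask for that the server does not offer yet.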

EAP radius testing using eapol_test from wpa_supplicant

To test EAP, we need to build the eapol_test tool from wpa_supplicant.

wget http://w1.fi/releases/wpa_supplicant-2.10.tar.gz

# unpack the release tarball

tar xf wpa_supplicant-2.10.tar.gz

cd wpa_supplicant-2.10/wpa_supplicant

$ cp defconfig .config

$ vi .config

CONFIG_EAPOL_TEST=y

# install development libraries needed

apt install libssl-dev libnl-3-dev libnl-genl-3-dev libnl-route-3-dev

make eapol_test

EAP/TTLS

Now we need a configuration file for wpa_supplicant which tests EAP:

ctrl_interface=/var/run/wpa_supplicant

ap_scan=1

network={

ssid="eduroam"

proto=WPA2

key_mgmt=WPA-EAP

pairwise=CCMP

group=CCMP

eap=TTLS

anonymous_identity="anonymous@example.com"

phase2="auth=PAP"

identity="username@example.com"

password="PASSword"

}

Now we can test against our radius server (with optional certificate test):

# ./wpa_supplicant-2.10/wpa_supplicant/eapol_test -c ffzg.conf -s testing123

and with the -o option for the certificate. Note that eapol_test documents -o as the file to which the received server certificate chain is written, so the file given here is overwritten; a custom CA cert to verify against is set with ca_cert in the network block of the configuration file:

# ./wpa_supplicant-2.10/wpa_supplicant/eapol_test -c ffzg.conf -s testing123 -o /etc/freeradius/3.0/certs/fRcerts/server-cert.pem

This will generate a lot of output, but in the radius log you should see:

Tue Dec 27 20:00:33 2022 : Auth: (9) Login OK: [username@example.com] (from client test-localhost port 0 cli 02-00-00-00-00-01 via TLS tunnel)

Tue Dec 27 20:00:33 2022 : Auth: (9) Login OK: [username@example.com] (from client test-localhost port 0 cli 02-00-00-00-00-01)
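Because eapol_test is so chatty, for a quick before/after comparison it is easier to look only at its result. The sketch below assumes that eapol_test exits with status 0 when authentication succeeds (matching the SUCCESS or FAILURE line it prints at the very end); the helper name eap_check and the EAPOL_TEST variable are hypothetical.

```shell
#!/bin/sh
# Path to the eapol_test binary built earlier; override via the environment.
EAPOL_TEST=${EAPOL_TEST:-./wpa_supplicant-2.10/wpa_supplicant/eapol_test}

# Hypothetical helper: run eapol_test for one configuration file, discard its
# verbose output, and print a one-line verdict based on the exit status.
# Arguments: configuration file, RADIUS shared secret.
eap_check() {
    if "$EAPOL_TEST" -c "$1" -s "$2" >/dev/null 2>&1; then
        echo "OK   $1"
    else
        echo "FAIL $1"
        return 1
    fi
}
```

For example, eap_check ffzg.conf testing123 prints a single OK or FAIL line, and the full output is still available by running eapol_test by hand when something fails.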

GTC

This seems like a piece of tribal knowledge (passed to me by another sysadmin), but to make GTC work, change default_eap_type to gtc under ttls and add a gtc section:

ttls {

# ... rest of config...

default_eap_type = gtc

# ... rest of config...

}

gtc {

challenge = "Password: "

auth_type = LDAP

}

and change the wpa_supplicant configuration to:

deenes:/home/dpavlin# cat eduroam-ttls-gtc.conf

ctrl_interface=/var/run/wpa_supplicant

ap_scan=1

network={

ssid="eduroam"

proto=WPA2

key_mgmt=WPA-EAP

pairwise=CCMP

group=CCMP

eap=TTLS

anonymous_identity="anonymous@example.com"

phase2="autheap=GTC"

identity="username@example.com"

password="PASSword"

}

PEAP

To make PEAP GTC work, I needed to add:

diff --git a/freeradius/3.0/mods-available/eap-aai b/freeradius/3.0/mods-available/eap-aai

index 245b7eb..6b7cefb 100644

--- a/freeradius/3.0/mods-available/eap-aai

+++ b/freeradius/3.0/mods-available/eap-aai

@@ -73,5 +73,11 @@ eap eap-aai {

auth_type = LDAP

}

+ # XXX 2023-01-06 dpavlin - peap

+ peap {

+ tls = tls-common

+ default_eap_type = gtc

+ virtual_server = "default"

+ }

}

which then can be tested with:

network={

ssid="wired"

key_mgmt=IEEE8021X

eap=PEAP

anonymous_identity="anonymous@example.com"

identity="username@example.com"

password="PASSword"

}