I had an interesting problem with conference name tags this week. I wanted to use a free software stack to produce them, and it turned out that this required patching a bash script to properly parse CSV files and learning new PDF tricks to print multiple PDF pages on a single sheet of paper. I will try to document them here so I won't have to rediscover them in two years for the next one...

For design, we decided to use Inkscape, a great vector drawing program. Even better, it already included the Inkscape Generator extension made just for that purpose.

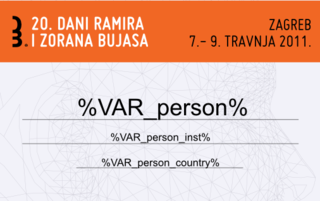

For the tag itself, we decided to use the ISO 7810 ID-1 card size of 85.60 × 53.98 mm and included the template variables required by the extension, as you can see in the picture.

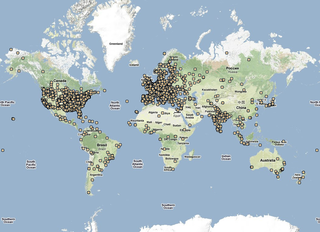

Data for conference participants ended up in Gnumeric and was exported to a CSV file. That's where we hit the first roadblock: the current version of the ink-generator extension doesn't support more than one comma inside a quoted field. However, the Inkscape Generator extension home page included a useful pointer to correct bash code for parsing CSV files by Chris F.A. Johnson, so I decided to import it into the ink-generator git repository and replace the CSV parser. A few patches later I had a working extension which produced 600+ PDF files on disk.
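To make the failure mode concrete, here is a minimal sketch of the problem using Python's standard `csv` module. This is not the bash parser from the extension, and the sample row is made up for illustration; it only shows why splitting on commas breaks once a quoted field contains several commas and an escaped quote.

```python
import csv
import io

# Hypothetical sample data: the affiliation field contains two commas
# and a doubled ("escaped") quote inside a single quoted field.
SAMPLE = (
    'id,name,affiliation\n'
    '001,Ada Lovelace,"Analytical Engines, Ltd., ""London"" office"\n'
)


def parse_rows(text):
    """Return one dict per data row, keyed by the header names."""
    return list(csv.DictReader(io.StringIO(text)))


# Naive approach: split the data line on every comma.
naive_fields = SAMPLE.splitlines()[1].split(",")

# CSV-aware approach: quoted commas and doubled quotes are handled.
rows = parse_rows(SAMPLE)

print(len(naive_fields))       # 5 pieces instead of the expected 3 fields
print(rows[0]["affiliation"])  # Analytical Engines, Ltd., "London" office
```

The naive split cuts the affiliation into three pieces, which is roughly the class of bug the unpatched extension had with quoted fields.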

In the process, I learned that you can invoke Inkscape extensions from the command line, which is nice for generating previews while you edit the code:

./generator.sh --var-type=name --data-file=test.csv --format=pdf --dpi=90 --output='$HOME/generator-output/%VAR_id%.pdf' --extra-vars=" " --preview=true akred1-var.svg

If `--preview=true` is removed, it will generate all files without GUI interaction, which is nice for automation.

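To get the tags sorted by last name, we created a fake id column padded with zeros. Since each output file is named after its id, the zero padding keeps the files in last-name order when they are listed alphabetically.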
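As a rough illustration of why the padding matters, the sketch below assigns zero-padded ids in last-name order. The field names `first` and `last`, the helper `assign_ids`, and the width of 4 digits are assumptions made for this example, not something taken from our spreadsheet.

```python
def assign_ids(participants, width=4):
    """Sort by last name (then first name) and attach a zero-padded id."""
    ordered = sorted(
        participants,
        key=lambda p: (p["last"].lower(), p["first"].lower()),
    )
    # f"{i:0{width}d}" pads the running number with leading zeros.
    return [dict(p, id=f"{i:0{width}d}") for i, p in enumerate(ordered, start=1)]


# Hypothetical participants, deliberately out of order.
people = [
    {"first": "Wei", "last": "Zhang"},
    {"first": "Jane", "last": "Adams"},
    {"first": "Tom", "last": "Miller"},
]
tagged = assign_ids(people)

# Without padding, string order differs from numeric order:
unpadded = sorted(["1", "2", "10"])           # ['1', '10', '2']
padded = sorted(["0001", "0002", "0010"])     # ['0001', '0002', '0010']
```

File names such as `0001.pdf`, `0002.pdf`, ... then sort the same way whether they are compared as numbers or as strings, which is what a shell glob does.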

Now we had to print them. While some printer drivers have an option to print multiple pages per sheet, the one we were using decided to center each name tag, which would have required too much manual cutting on each side of every tag. This was obviously inefficient. I knew about the psnup utility for PostScript, but I didn't know that there is also pdfnup, which is part of PDFjam (and available as a Debian package).

Getting the layout just right involved reading the pdfpages package documentation, but this is what I ended up with:

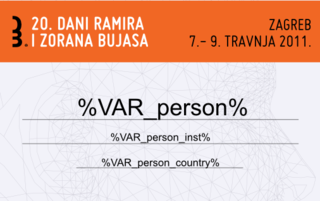

pdfnup --suffix nup --nup '2x5' --paper a4paper --no-landscape --noautoscale true --frame true --outfile nup.pdf -- ~/generator-output/*.pdf

This produces the layout which you can see in the picture, nicely centered in the middle of the page (this is why I included a fake grain background, to show the centering).
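A quick sanity check of the numbers behind that layout, as a small Python calculation. It assumes the cards are placed at their natural ID-1 size with no gaps between them and that the whole 2x5 block is centered on an A4 sheet (210 × 297 mm); it does not model anything pdfnup does internally.

```python
# ID-1 card size and A4 page size, in millimetres.
CARD_W_MM, CARD_H_MM = 85.60, 53.98
A4_W_MM, A4_H_MM = 210.0, 297.0
COLS, ROWS = 2, 5


def centered_margin(page_mm, card_mm, count):
    """Margin on each side when `count` cards are centered as one block."""
    return (page_mm - card_mm * count) / 2


block_w = CARD_W_MM * COLS   # about 171.2 mm wide
block_h = CARD_H_MM * ROWS   # about 269.9 mm tall
margin_x = centered_margin(A4_W_MM, CARD_W_MM, COLS)   # about 19.4 mm
margin_y = centered_margin(A4_H_MM, CARD_H_MM, ROWS)   # about 13.55 mm

fits = block_w <= A4_W_MM and block_h <= A4_H_MM
print(f"block {block_w:.1f} x {block_h:.1f} mm, "
      f"margins {margin_x:.2f} / {margin_y:.2f} mm, fits: {fits}")
```

So ten unscaled cards fit on one portrait A4 sheet with room to spare on every side, which is why turning autoscaling off was an option at all.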

In the end, it didn't really work out. Parsing CSV correctly (and supporting quotes inside quoted values) is a hard task in bash, and I had to admit that I didn't really know how to fix it. With only a day until the start of the conference and no time to waste, I took my favorite language, Perl, and wrote a 60-line script which does the same thing but uses the Text::CSV Perl module to parse the data files.
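The core of such a script is small once a real CSV library does the parsing. The following is a sketch of the idea in Python, not the Perl script itself: it assumes placeholders of the form `%VAR_column%` in the SVG template (matching the `%VAR_id%` pattern used in the output file name above), and the helper name `fill_template` and the sample data are hypothetical. Exporting each filled SVG to PDF with Inkscape is left out.

```python
import csv
import io
import re
from xml.sax.saxutils import escape

# Assumed placeholder syntax: %VAR_<column name>%
PLACEHOLDER = re.compile(r"%VAR_(\w+)%")


def fill_template(template, row):
    """Replace each %VAR_name% with the matching CSV value, XML-escaped."""
    def lookup(match):
        name = match.group(1)
        # Unknown placeholders are left untouched so they are easy to spot.
        return escape(row[name]) if name in row else match.group(0)
    return PLACEHOLDER.sub(lookup, template)


# Hypothetical template fragment and data file.
TEMPLATE = "<text>%VAR_name%</text><text>%VAR_org%</text><text>%VAR_missing%</text>"
DATA = 'id,name,org\n0001,Ada Lovelace,"R&D, Engines, Ltd."\n'

for row in csv.DictReader(io.StringIO(DATA)):
    svg = fill_template(TEMPLATE, row)
    # A real script would write svg to a file named after row["id"] here.
    print(row["id"], svg)
```

Escaping the values matters because SVG is XML: an ampersand in an organization name would otherwise produce a file that Inkscape refuses to open.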

There is something to be learned here. First, the language and good supporting libraries do matter. Second, sometimes you are better off starting from scratch. But that decision should be made only when you have exhausted other options, since fixing the original extension would have benefited the wider community. There is a balance between scratching my own itch and the common good, and it is tricky to get right.
