I recently got a big-screen TV (big for my living room, at least). It came with a few HDMI ports and VGA, so the next logical step was to connect a computer to it. And of course, I then noticed that it would be really handy to have a wireless keyboard and mouse to complete this nice setup. However, I also wanted to ssh over that network, so I started examining how secure wireless keyboards are. tl;dr: they are not secure.

First I asked for suggestions on which wireless keyboard to buy. I have quite big fingers, so mini models just don't do it for me. I got a suggestion to take a look at the Logitech K400, and sure enough it seemed like a good choice. One of the first things I noticed is that it supports 128-bit AES encryption. I started to have a good feeling about it, but I wanted to know more, so I hopped over to the Logitech Advanced 2.4 GHz Technology PDF and discovered that not all keys are encrypted. To quote the documentation:

> The encryption applies to all standard keyboard keys (a, s, d, f...) and modifiers (Shift, Ctrl, Alt...). The multimedia keys (Play, Pause, Mute...) that may be implemented in some keyboards are transmitted in clear text.

How can I trust a keyboard which doesn't encrypt all traffic? This got me thinking. Can I somehow verify that keys are encrypted? Is this a widespread problem? Can I make a mistake and broadcast my keystrokes to the whole world?

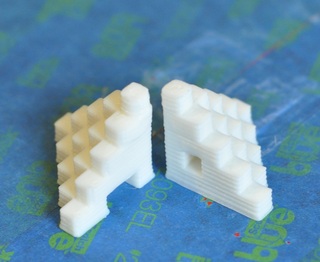

Sure I can. For older 27 MHz keyboards there is the KeyKeriki v1.0 - 27MHz project, which implements a sniffer for them (video: DeepSec 2009: Keykeriki: Universal Wireless Keyboard Sniffing For The Masses). But Logitech is 2.4 GHz, so it's secure, right? Well, there is KeyKeriki v2.0 - 2.4GHz, which does the same for 2.4 GHz (video: Keykeriki V2 - Practical Exploitation of Modern Wireless Devices [SIGINT10]). OK, Logitech does some kind of AES on top of that, but since it transfers some keys unencrypted and it's proprietary technology, I can't really check that.

I also got a suggestion to use a Bluetooth keyboard because it's secure. Well, a quick search revealed Ubertooth One, which basically defeats Bluetooth protection with a bit of sniffing and a little brute force.

By this point, I was puzzled. Is there a secure wireless keyboard with a touchpad that I can buy? Something I can be sure encrypts all traffic, as opposed to only some keys? Or is a USB extension cable the only real solution for me?